OpenAI has announced GPT-4 Turbo, an upgraded version of its GPT-4 model. The new model has a 128K-token context window, enough to fit the equivalent of more than 300 pages of text in a single prompt.
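
Here is a back-of-the-envelope check of the 300-page figure. It assumes roughly 0.75 English words per token (a common rule of thumb, not a figure from the announcement) and about 300 words per printed page (also an assumption):

$$
128{,}000~\text{tokens} \times 0.75~\frac{\text{words}}{\text{token}} = 96{,}000~\text{words},
\qquad
\frac{96{,}000~\text{words}}{300~\text{words/page}} = 320~\text{pages}
$$

Text with many numbers, code, or non-English words usually needs more tokens per word, so the real page count varies with the content.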

It is also cheaper than its predecessor. Input tokens cost one third as much as with GPT-4, and output tokens cost half as much.
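
To make the price cut concrete, here is a small Python sketch that compares the cost of one request. It assumes the per-1,000-token list prices OpenAI published at launch: USD 0.03 input and USD 0.06 output for GPT-4 with an 8K context, and USD 0.01 input and USD 0.03 output for GPT-4 Turbo. The PRICES table and request_cost helper are illustrative and not part of any OpenAI library.

```python
from decimal import Decimal

# USD per 1,000 tokens as (input, output). These are launch list prices,
# assumed here for illustration; check OpenAI's pricing page for current values.
PRICES = {
    "gpt-4": (Decimal("0.03"), Decimal("0.06")),
    "gpt-4-1106-preview": (Decimal("0.01"), Decimal("0.03")),
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> Decimal:
    """Return the USD cost of one request under the PRICES table above."""
    input_price, output_price = PRICES[model]
    return (input_price * input_tokens + output_price * output_tokens) / 1000


if __name__ == "__main__":
    # A hypothetical request: a 10,000-token prompt and a 1,000-token reply.
    for model in PRICES:
        print(model, request_cost(model, 10_000, 1_000))
```

For the example request, this gives USD 0.36 under the GPT-4 prices and USD 0.13 under the GPT-4 Turbo prices. Decimal is used instead of float so that the money arithmetic stays exact.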

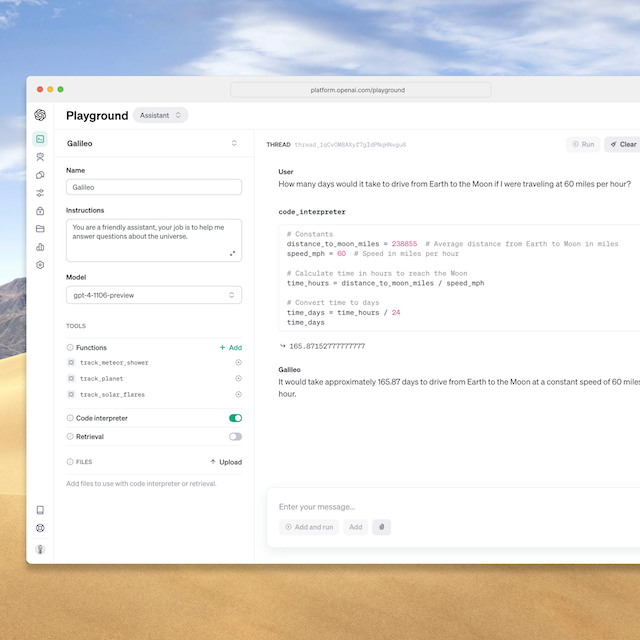

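Developers can try GPT-4 Turbo now by passing gpt-4-1106-preview as the model name in the API. OpenAI expects to release a stable, production-ready version soon. The release also improves function calling: the model can now request several function calls in a single message. For example, a user request to open the car window and turn off the A/C can produce both calls in one response instead of requiring several round trips.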
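
With parallel function calling, one assistant message can carry several entries in its tool_calls list. Each entry names a function and passes its arguments as a JSON string. The application runs each call and replies with one message of role "tool" per call, linked back to the request by tool_call_id. The offline Python sketch below processes such a message. The message is hand-written to mirror the shape of a raw Chat Completions response, and the call IDs are made up. set_window and set_ac are hypothetical functions that would have been declared to the model through the tools request parameter.

```python
import json
from typing import Any, Callable


# Hypothetical local functions the model is allowed to call.
def set_window(position: str) -> dict[str, Any]:
    return {"window": position}


def set_ac(on: bool) -> dict[str, Any]:
    return {"ac": "on" if on else "off"}


HANDLERS: dict[str, Callable[..., dict[str, Any]]] = {
    "set_window": set_window,
    "set_ac": set_ac,
}


def run_tool_calls(message: dict[str, Any]) -> list[dict[str, str]]:
    """Execute every tool call in an assistant message and build the
    'tool' messages to send back, one per call, matched by tool_call_id."""
    results = []
    for call in message.get("tool_calls") or []:
        fn = call["function"]
        args = json.loads(fn["arguments"])  # arguments arrive as a JSON string
        output = HANDLERS[fn["name"]](**args)
        results.append({
            "role": "tool",
            "tool_call_id": call["id"],
            "content": json.dumps(output),
        })
    return results


# Hand-written assistant message with two calls in a single turn (IDs are made up).
example_message = {
    "role": "assistant",
    "content": None,
    "tool_calls": [
        {"id": "call_1", "type": "function",
         "function": {"name": "set_window", "arguments": '{"position": "open"}'}},
        {"id": "call_2", "type": "function",
         "function": {"name": "set_ac", "arguments": '{"on": false}'}},
    ],
}

if __name__ == "__main__":
    for msg in run_tool_calls(example_message):
        print(msg)
```

The returned tool messages would be appended to the conversation and sent back to the model, which can then write its final answer using all of the results at once.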

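According to OpenAI, GPT-4 Turbo is better than previous models at carefully following instructions, particularly when asked to produce a specific output format. It is complemented by a new JSON mode, which ensures that the model responds with syntactically valid JSON, though not necessarily in any particular schema. This is aimed at developers who use the Chat Completions API to generate structured output outside of function calling.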
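
In the API, JSON mode is enabled by setting response_format to {"type": "json_object"}. OpenAI's documentation also asks that the messages themselves instruct the model to produce JSON. The sketch below only builds the request body as a plain dict (no network call) and then parses a reply. build_json_request and parse_reply are hypothetical helpers, and the early check for the word "JSON" mirrors that documented requirement rather than any official client behavior.

```python
import json
from typing import Any


def build_json_request(messages: list[dict[str, str]],
                       model: str = "gpt-4-1106-preview") -> dict[str, Any]:
    """Build a Chat Completions request body with JSON mode enabled."""
    # JSON mode expects the prompt itself to mention JSON; fail early if it does not.
    if not any("json" in m["content"].lower() for m in messages):
        raise ValueError("JSON mode: instruct the model to reply in JSON")
    return {
        "model": model,
        "messages": messages,
        "response_format": {"type": "json_object"},
    }


def parse_reply(content: str) -> dict[str, Any]:
    """JSON mode promises syntactically valid JSON, not a particular schema,
    so the structure still has to be checked after parsing."""
    data = json.loads(content)
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    return data


if __name__ == "__main__":
    body = build_json_request([
        {"role": "system", "content": "Reply in JSON with keys 'city' and 'country'."},
        {"role": "user", "content": "Where is the Eiffel Tower?"},
    ])
    print(json.dumps(body, indent=2))
    print(parse_reply('{"city": "Paris", "country": "France"}'))
```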

OpenAI is also introducing a ‘seed’ parameter that makes the model return consistent completions most of the time. This helps when replaying requests for debugging or writing unit tests. OpenAI also plans to return log probabilities for the most likely output tokens, which developers can use to build features such as autocomplete in a search experience.
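
In a Chat Completions request body, reproducibility is requested with an integer seed, and log probabilities with logprobs and top_logprobs. The Python sketch below builds such a body as a plain dict and turns one token position's alternatives into ranked autocomplete candidates. The helper names and the example log-probability values are made up for illustration. Each response also carries a system_fingerprint field. If the fingerprint differs between two calls, backend changes may explain why the same seed produced different outputs.

```python
import math
from typing import Any


def build_request(prompt: str, seed: int, top_logprobs: int = 3) -> dict[str, Any]:
    """Chat Completions body asking for reproducible sampling and per-token log probs."""
    return {
        "model": "gpt-4-1106-preview",
        "messages": [{"role": "user", "content": prompt}],
        "seed": seed,                  # same seed and parameters: mostly the same output
        "logprobs": True,              # return log probabilities of output tokens
        "top_logprobs": top_logprobs,  # plus the most likely alternatives at each position
    }


def same_backend(resp_a: dict[str, Any], resp_b: dict[str, Any]) -> bool:
    """Seeded outputs are only expected to match when system_fingerprint is unchanged."""
    fp = resp_a.get("system_fingerprint")
    return fp is not None and fp == resp_b.get("system_fingerprint")


def suggestions(token_info: dict[str, Any]) -> list[tuple[str, float]]:
    """Convert one position's top_logprobs into (token, probability) pairs,
    most likely first, e.g. to drive autocomplete suggestions."""
    pairs = [(alt["token"], math.exp(alt["logprob"])) for alt in token_info["top_logprobs"]]
    return sorted(pairs, key=lambda pair: pair[1], reverse=True)


# Made-up log probabilities for a single output position.
example_position = {
    "token": " Paris",
    "logprob": -0.1,
    "top_logprobs": [
        {"token": " Lyon", "logprob": -3.0},
        {"token": " Paris", "logprob": -0.1},
        {"token": " Nice", "logprob": -4.2},
    ],
}

if __name__ == "__main__":
    print(build_request("The capital of France is", seed=42))
    for token, prob in suggestions(example_position):
        print(repr(token), round(prob, 3))
```

Converting with math.exp turns each log probability back into an ordinary probability. This makes it straightforward to show only the candidates above a chosen confidence threshold.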

Alongside GPT-4 Turbo, OpenAI has released a new version of GPT-3.5 Turbo that supports a 16K context window by default. According to OpenAI's internal evaluations, it shows a 38% improvement on format-following tasks such as generating JSON, XML, and YAML. The new model is available in the API as gpt-3.5-turbo-1106. Applications that use the gpt-3.5-turbo model name will be upgraded to it automatically on December 11.

OpenAI also released the Assistants API, which aims to simplify building AI assistants into applications. Assistants can use two built-in tools, Code Interpreter and Retrieval, as well as developer-defined functions through function calling. This reduces the amount of code developers have to write themselves.
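
As a rough illustration, an assistant is declared once with a model, instructions, and a list of tools. The Python sketch below builds such a declaration as a plain dict. With OpenAI's official Python SDK, a dict like this would be passed as keyword arguments to client.beta.assistants.create. The tool type names follow the API as launched; later API versions renamed retrieval to file_search. assistant_config and the get_order_status function schema are hypothetical.

```python
from typing import Any, Optional


def assistant_config(name: str, instructions: str,
                     functions: Optional[list[dict[str, Any]]] = None) -> dict[str, Any]:
    """Body for creating an assistant that can use both built-in tools
    and any developer-defined functions."""
    tools: list[dict[str, Any]] = [
        {"type": "code_interpreter"},  # writes and runs Python in a sandbox
        {"type": "retrieval"},         # searches uploaded files (launch-era tool name)
    ]
    for fn in functions or []:
        tools.append({"type": "function", "function": fn})
    return {
        "name": name,
        "instructions": instructions,
        "model": "gpt-4-1106-preview",
        "tools": tools,
    }


# Hypothetical function schema, described with JSON Schema as in function calling.
get_order_status = {
    "name": "get_order_status",
    "description": "Look up the status of an order by its ID",
    "parameters": {
        "type": "object",
        "properties": {"order_id": {"type": "string"}},
        "required": ["order_id"],
    },
}

if __name__ == "__main__":
    print(assistant_config("Support helper", "Answer customer questions concisely.",
                           [get_order_status]))
```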

GPT-4 Turbo can also accept images as input in the Chat Completions API through the gpt-4-vision-preview model. This enables use cases such as generating captions, analyzing real-world images in detail, and reading documents that contain figures.
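
With vision input, a user message's content becomes a list of parts that mixes text with images. Each image is given either as a public URL or as a base64-encoded data URL. The Python sketch below builds such a message for a local image. vision_message is a hypothetical helper, and the resulting message would go into the messages list of a request that uses model gpt-4-vision-preview.

```python
import base64
from typing import Any


def vision_message(question: str, image_bytes: bytes, mime: str = "image/png") -> dict[str, Any]:
    """A user message mixing text and an inline image, using the content-parts
    format accepted by gpt-4-vision-preview."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    data_url = f"data:{mime};base64,{encoded}"  # a public https:// URL also works here
    return {
        "role": "user",
        "content": [
            {"type": "text", "text": question},
            {"type": "image_url", "image_url": {"url": data_url}},
        ],
    }


if __name__ == "__main__":
    # Placeholder bytes stand in for a real file, e.g. open("chart.png", "rb").read().
    msg = vision_message("What does this chart show?", b"\x89PNG placeholder")
    body = {"model": "gpt-4-vision-preview", "messages": [msg]}
    print(body["messages"][0]["content"][0])
```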

Developers can also integrate DALL·E 3 into their applications through the Images API by specifying dall-e-3 as the model. In addition, a new Text-to-Speech API generates human-quality speech from text, with six preset voices to choose from.
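
Both features are plain HTTP endpoints. The Python sketch below only builds their JSON request bodies, for POST /v1/images/generations and POST /v1/audio/speech. It assumes the model names, DALL·E 3 image sizes, and six voice names documented at launch; OpenAI may have added options since. image_request and speech_request are hypothetical helpers.

```python
from typing import Any

# Options as documented at launch (assumed here; newer options may exist).
DALLE3_SIZES = {"1024x1024", "1792x1024", "1024x1792"}
TTS_VOICES = {"alloy", "echo", "fable", "onyx", "nova", "shimmer"}


def image_request(prompt: str, size: str = "1024x1024") -> dict[str, Any]:
    """Body for the Images API generations endpoint using DALL·E 3."""
    if size not in DALLE3_SIZES:
        raise ValueError(f"unsupported size for dall-e-3: {size}")
    # dall-e-3 generates one image per request, so n is fixed at 1.
    return {"model": "dall-e-3", "prompt": prompt, "size": size, "n": 1}


def speech_request(text: str, voice: str = "alloy", hd: bool = False) -> dict[str, Any]:
    """Body for the text-to-speech endpoint; tts-1 favors speed, tts-1-hd quality."""
    if voice not in TTS_VOICES:
        raise ValueError(f"unknown voice: {voice}")
    return {"model": "tts-1-hd" if hd else "tts-1", "input": text, "voice": voice}


if __name__ == "__main__":
    print(image_request("A watercolor fox reading a newspaper", size="1792x1024"))
    print(speech_request("GPT-4 Turbo is now in preview.", voice="nova"))
```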

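On the customization front, OpenAI is opening an experimental access program for GPT-4 fine-tuning. It is also launching a Custom Models program, in which selected organizations can work with OpenAI researchers to train a custom GPT-4 for their specific domain.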

Developers can begin exploring these new features and improvements, with beta access to the Assistants API now open to all.

The full set of announcements is available in OpenAI's blog post.

[Image courtesy: OpenAI]